Find & Send Cold Emails to 500 Unique Prospects Every Month for FREE.

Adam Hossain

Published February 3, 2026

9 min


Cold email still works in 2026, but only when you understand what “good” actually means.

This guide breaks down realistic cold email benchmarks for opens, replies, and conversions.

It explains why these numbers vary across industries and audiences, and shows how to evaluate campaign performance without relying on outdated averages or misleading industry reports.

Benchmarks don’t define success. They provide direction.

In 2026, cold email benchmarks help teams understand performance trends and set realistic expectations.

Used correctly, they offer context for evaluating results and identifying improvement areas without locking teams into fixed targets or outdated assumptions.

Benchmarks aren’t static.

Inbox algorithms constantly change how emails are filtered and prioritized, reshaping visibility and engagement.

Buyer behavior also shifts as inboxes become more crowded and buyers grow more selective over time.

Outreach tools evolve in parallel, improving targeting, personalization, and deliverability standards.

As these factors change together, performance baselines move.

Numbers that worked two years ago may no longer reflect today’s outreach environment or realistic expectations.

Regular reassessment keeps teams aligned with current inbox conditions and buyer behavior.

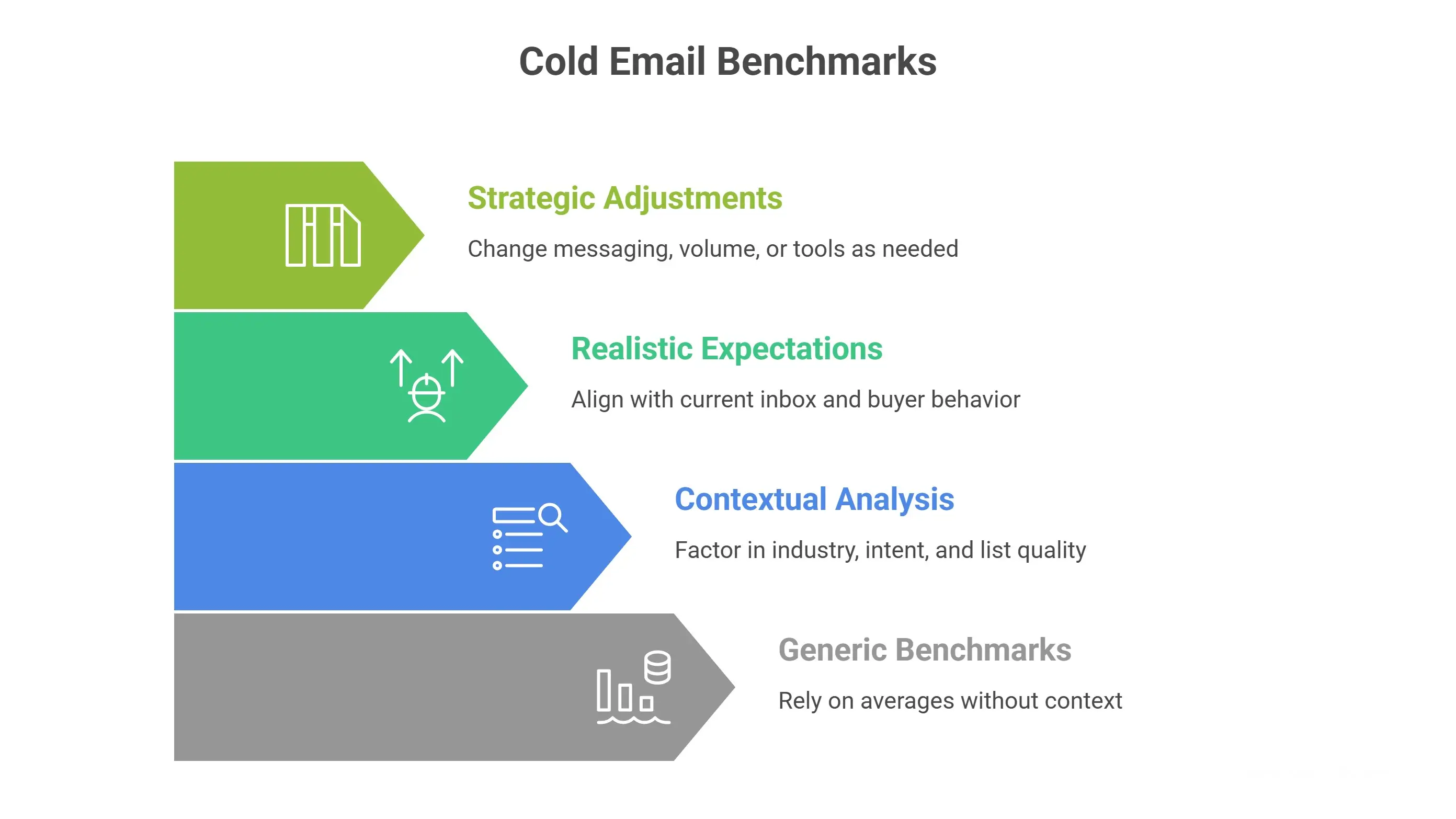

Many teams rely on generic benchmark averages.

They compare results without factoring in industry context, buyer intent, or list quality.

This leads to misleading conclusions about campaign performance and health.

What appears to be underperformance may actually be normal for a specific audience, offer type, or awareness stage.

Without proper context, teams risk changing messaging, volume, or tools unnecessarily instead of addressing the real constraints shaping their outbound results, a risk that grows as campaigns scale and become more complex.

Open rates still matter in 2026. But they no longer define success.

They mainly indicate deliverability and initial relevance.

This section shares realistic cold email open-rate ranges for 2026 and explains how to interpret them correctly.

You’ll learn when open rates signal a real issue and when they don’t, so you can focus on the metrics that actually drive pipeline.

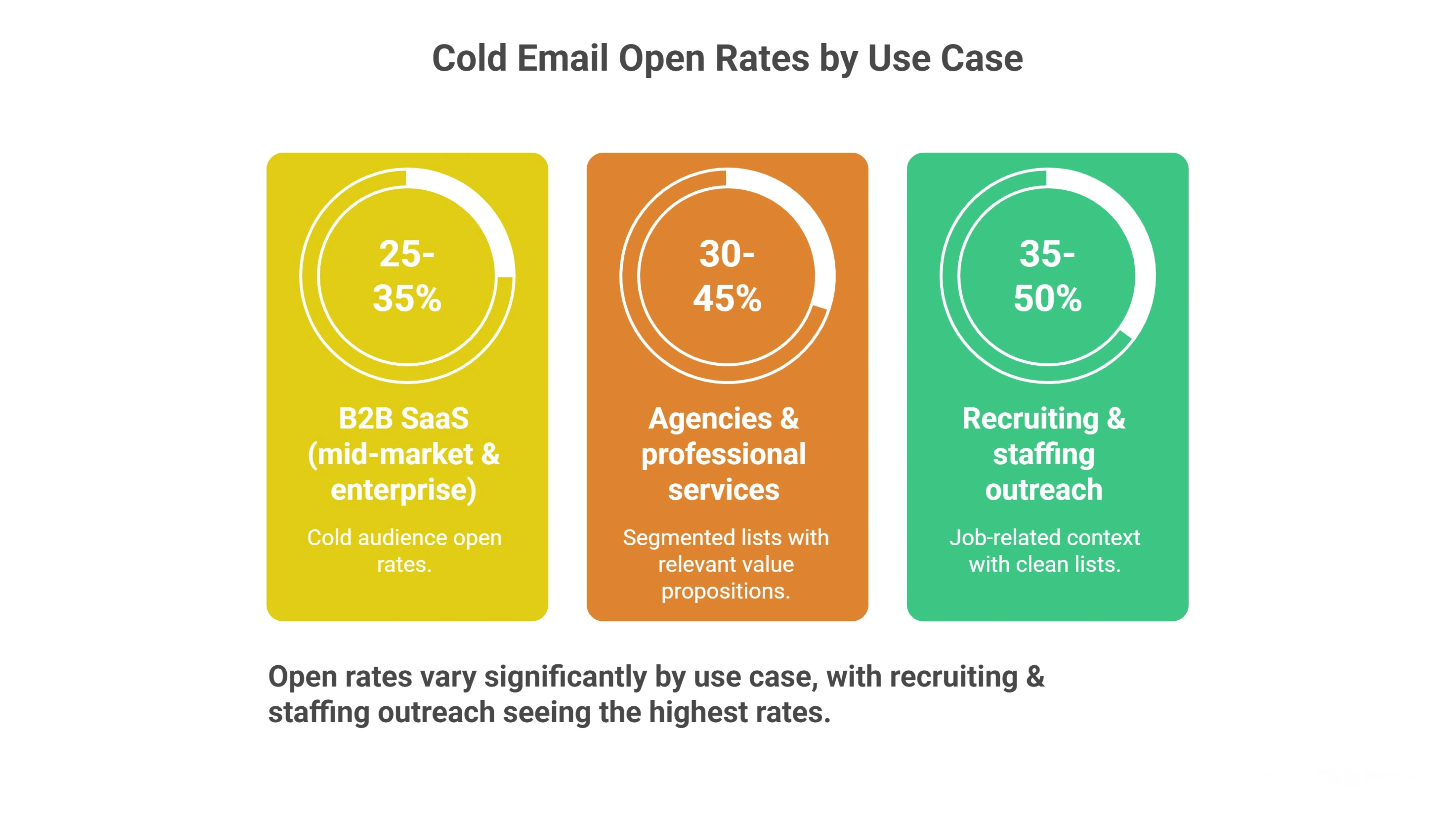

Cold email open rates vary significantly by audience type and targeting context.

Inbox competition, relevance, and deliverability all affect whether a prospect opens a message.

Segmenting open rates by use case provides a more accurate baseline than broad industry averages.

Comparing open rates within similar audience contexts helps teams separate deliverability issues from targeting or relevance gaps, instead of misjudging performance against numbers drawn from unrelated audiences.
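To make segment-level comparison concrete, here is a minimal sketch that computes open rate per audience segment instead of one blended number. It assumes you can export one record per sent email with a segment label, a delivered flag, and an opened flag; the `SendRecord` type and its field names are hypothetical, not part of any specific tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SendRecord:
    segment: str     # hypothetical audience label, e.g. "saas-founders"
    delivered: bool  # False if the message bounced
    opened: bool     # as reported by open tracking; treat as approximate


def open_rate_by_segment(records):
    """Return {segment: opens / delivered} for each audience segment."""
    delivered = defaultdict(int)
    opened = defaultdict(int)
    for r in records:
        if not r.delivered:
            continue  # bounced emails say nothing about relevance
        delivered[r.segment] += 1
        if r.opened:
            opened[r.segment] += 1
    return {seg: opened[seg] / n for seg, n in delivered.items()}
```

Bounces are excluded from the denominator so that each segment's rate reflects emails that actually reached an inbox.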

Open rates mainly reflect inbox placement and subject-line relevance.

They indicate whether emails are delivered and noticed by recipients.

High opens do not guarantee replies, conversations, or booked meetings.

Messages may be opened briefly out of curiosity, then ignored.

Because of this, open rates function best as a diagnostic signal.

They are not a reliable measure of campaign effectiveness or buyer intent.

Used alone, they can mislead teams about campaign performance and true outbound impact, particularly as campaigns run longer and scale up.
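One way to keep opens in their diagnostic role is a simple triage rule: low opens point first at inbox placement and subject-line relevance, while healthy opens with weak replies point at targeting, message clarity, or the offer. The sketch below assumes you supply your own baseline thresholds for a comparable audience; the function name and its keyword arguments are hypothetical, and no universal threshold values are implied.

```python
def diagnose(open_rate, reply_rate, *, min_open_rate, min_reply_rate):
    """Rough first-pass triage of where a campaign is breaking down.

    min_open_rate and min_reply_rate are caller-supplied baselines for a
    comparable audience; this sketch deliberately has no defaults.
    """
    if open_rate < min_open_rate:
        # Opens mainly reflect inbox placement and subject-line relevance.
        return "check deliverability and subject-line relevance"
    if reply_rate < min_reply_rate:
        # Emails are seen but not answered: look at the message itself.
        return "check targeting, message clarity, and offer"
    return "within baseline for this audience"
```

The order of the checks matters: replies cannot be judged fairly until emails are reliably landing and being noticed.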

In 2026, cold email reply rate benchmarks matter more than opens or send volume alone.

They show whether your message resonates with the right audience—not just whether emails are delivered or seen.

The real value comes from understanding realistic reply-rate ranges and evaluating them through audience context, intent, and reply quality, rather than chasing raw percentages.

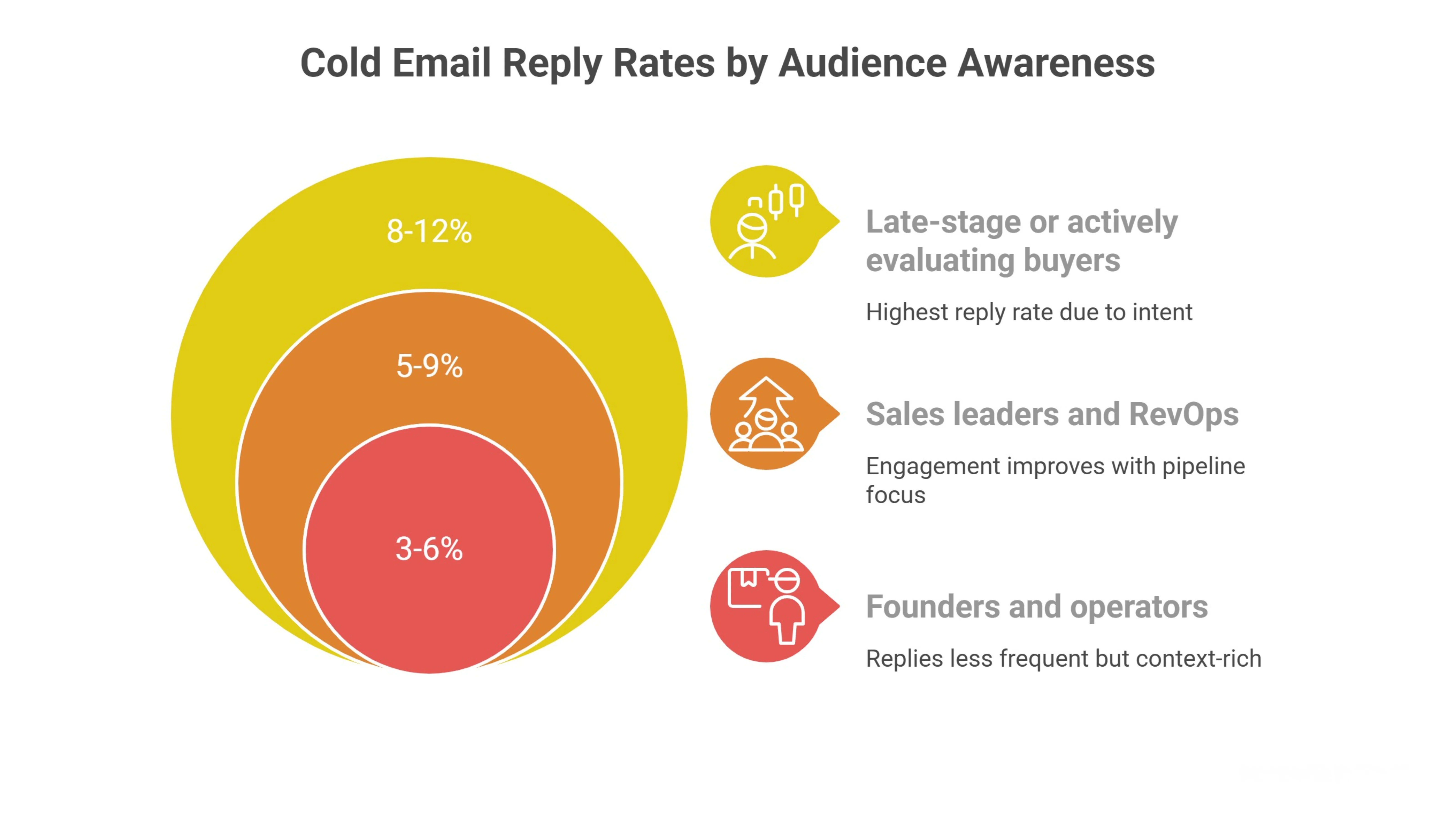

Reply rates vary based on who you contact and how aware they are of the problem you solve.

Audience maturity and urgency strongly influence whether prospects respond and how quickly conversations move forward.

Comparing reply rates within similar awareness levels provides more reliable benchmarks than relying on broad industry averages alone.
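As an illustration of comparing within awareness levels, the sketch below expresses one campaign's reply rate as a ratio to the median of other campaigns aimed at the same awareness level, so 1.0 means typical for that peer group. The dictionary keys (`name`, `awareness`, `sent`, `replies`) and the function name are hypothetical; adapt them to however your campaign data is exported.

```python
from statistics import median


def reply_rate_vs_peers(campaigns, name):
    """Ratio of one campaign's reply rate to the median reply rate of
    other campaigns targeting the same awareness level (1.0 = typical)."""
    target = next((c for c in campaigns if c["name"] == name), None)
    if target is None:
        raise ValueError(f"unknown campaign: {name}")
    peers = [
        c for c in campaigns
        if c["awareness"] == target["awareness"] and c["name"] != name
    ]
    if not peers:
        raise ValueError("no peer campaigns at this awareness level")
    peer_median = median(c["replies"] / c["sent"] for c in peers)
    if peer_median == 0:
        raise ValueError("peer median reply rate is zero")
    return (target["replies"] / target["sent"]) / peer_median
```

The median is used rather than the mean so that a single unusually strong or weak campaign does not distort the peer baseline.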

Not every reply represents progress in cold outreach.

Positive replies show clear interest or intent to take a next step.

Neutral replies acknowledge the message without urgency or buying signals.

Negative replies indicate disinterest, poor fit, or timing issues.

Separating replies by quality helps teams assess real traction.

This approach shifts measurement toward meaningful conversations rather than inflated reply counts that overstate success.

Tracked over time across different audiences and stages of outreach, it also supports clearer decisions on targeting and follow-up strategy.
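A minimal sketch of separating replies by quality, assuming each reply has already been labeled positive, neutral, or negative upstream (by a person or a classifier; labeling itself is out of scope here). The `ReplyQuality` enum and `reply_breakdown` function are hypothetical names. The point is to report the positive reply rate alongside the raw reply rate.

```python
from collections import Counter
from enum import Enum


class ReplyQuality(Enum):
    POSITIVE = "positive"  # clear interest or intent to take a next step
    NEUTRAL = "neutral"    # acknowledgement without urgency or buying signals
    NEGATIVE = "negative"  # disinterest, poor fit, or timing issues


def reply_breakdown(labels, delivered):
    """Summarize labeled replies for one campaign.

    labels: one ReplyQuality per reply received.
    delivered: number of emails that reached an inbox.
    """
    counts = Counter(labels)
    total = len(labels)
    positive = counts[ReplyQuality.POSITIVE]
    return {
        "reply_rate": total / delivered,
        "positive_reply_rate": positive / delivered,
        "share_positive": positive / total if total else 0.0,
        "counts": {q.value: counts[q] for q in ReplyQuality},
    }
```

Two campaigns with the same raw reply rate can differ sharply in `positive_reply_rate`, which is the figure closer to real traction.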

A low reply rate doesn’t automatically signal failure.

Results should be viewed relative to audience maturity and intent.

Outreach goals and the conversation stage matter significantly.

Early-stage awareness campaigns often produce fewer replies by design.

This is expected when prospects are not actively evaluating solutions.

Consistently low engagement across campaigns deserves closer attention.

It may indicate issues with targeting accuracy.

Unclear messaging can also suppress responses.

Weak offers reduce motivation to engage.

Contextual analysis helps teams identify real constraints before reacting.

Measured adjustments improve reply outcomes sustainably over time, without unnecessary reactive changes.

Reply rate is the clearest signal of message relevance in cold outreach.

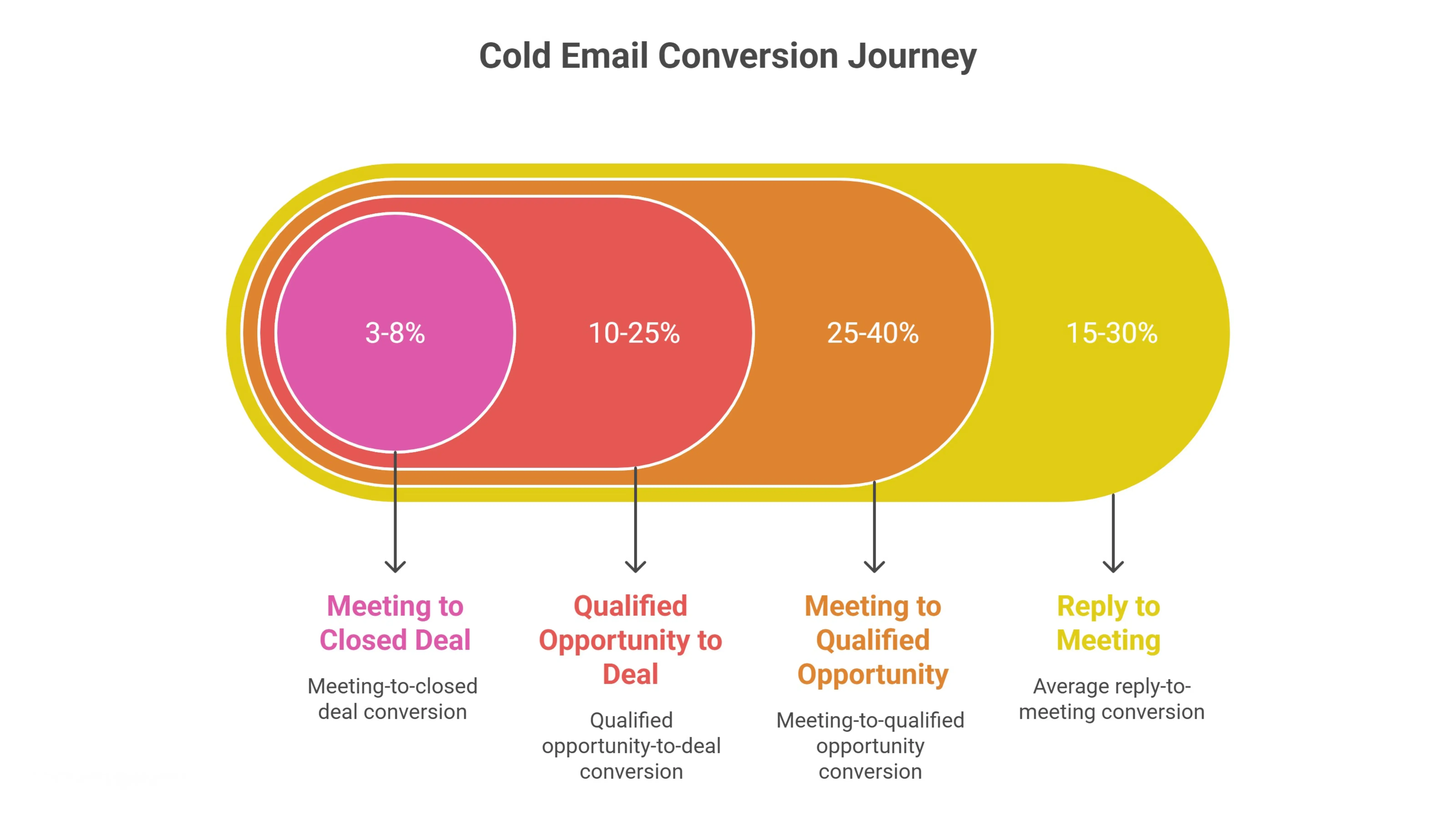

Conversion rates show whether cold email actually creates pipeline.

Here, the focus shifts from engagement to outcomes: tracking how replies turn into meetings and how meetings convert into deals.

It also highlights where most outbound campaigns lose momentum and why strong engagement doesn’t always translate into qualified opportunities or revenue.

Not every reply turns into a booked meeting.

Momentum is often lost when qualification is weak, next steps are unclear, or follow-ups are delayed.

Reply-to-meeting benchmarks help teams understand whether breakdowns happen after engagement rather than during targeting or messaging.

Booking meetings is only one step in the conversion journey.

A significant share of meetings fail to progress due to poor fit, weak intent, or unclear qualification.

Downstream benchmarks help teams understand where value is lost after meetings and prevent overestimating pipeline strength based on meeting volume alone.

This stage clarifies how qualification standards and follow-up quality shape real pipeline outcomes.
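To see where momentum is lost after engagement, it helps to compute conversion between each pair of consecutive stages rather than one end-to-end figure. The sketch below assumes an ordered list of `(stage, count)` pairs; the stage names in the usage example are illustrative, not a prescribed funnel definition.

```python
def stage_conversion(funnel):
    """Conversion rate between consecutive stages of an outbound funnel.

    funnel: ordered list of (stage_name, count) pairs, for example
    delivered -> replied -> meeting -> opportunity (names are illustrative).
    """
    rates = {}
    for (prev_name, prev_n), (name, n) in zip(funnel, funnel[1:]):
        # Guard against empty earlier stages instead of dividing by zero.
        rates[f"{prev_name}->{name}"] = n / prev_n if prev_n else 0.0
    return rates


funnel = [("delivered", 1000), ("replied", 50), ("meeting", 10), ("opportunity", 4)]
print(stage_conversion(funnel))
```

Comparing each stage-to-stage rate with your own earlier periods shows whether a drop happens during targeting and messaging (delivered to replied) or after engagement (replied to meeting, meeting to opportunity).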

Benchmarks don’t exist in isolation.

In real campaigns, cold email benchmarks are shaped by execution choices, not just messaging.

List quality, sending patterns, and inbox management often determine whether results land above or below average, making accurate diagnosis essential before adjusting copy, volume, or tools.

Understanding these factors prevents misinterpretation and helps teams focus on improvements where impact can be measured consistently.

List quality influences every stage of cold outreach.

Highly targeted, intent-aligned prospects consistently outperform large, generic databases.

When buyers already recognize a problem or actively explore solutions, opens and replies increase naturally.

Buyer readiness, therefore, impacts benchmarks more strongly than subject lines, templates, or minor copy changes.

Without intent alignment, even well-written emails struggle to generate meaningful responses across industries and roles.

This gap explains why campaigns using identical messaging can produce very different outcomes across market contexts.

Deliverability directly affects how benchmarks appear in practice.

Inbox placement, send timing, and pacing influence whether emails are seen or ignored.

Poor warm-up, aggressive volume, or inconsistent sending patterns reduce opens, replies, and conversions.

These issues persist even when targeting and messaging are otherwise strong.

Maintaining healthy infrastructure requires gradual scaling, consistent schedules, and ongoing monitoring to protect long-term performance.
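Gradual scaling can be planned as a simple capped ramp: raise the daily send limit by a fixed percentage each week until it reaches a ceiling. This is a minimal sketch; the starting volume, ceiling, and growth rate are hypothetical inputs, not recommended values, and should come from your provider's limits and your own warm-up data.

```python
def ramp_schedule(start, target, weekly_growth, weeks):
    """Daily send cap for each week of a gradual ramp-up.

    start: initial daily cap; target: ceiling that is never exceeded;
    weekly_growth: fractional increase per week (0.5 means +50%).
    All three are hypothetical, caller-chosen values.
    """
    caps = []
    cap = float(start)
    for _ in range(weeks):
        caps.append(min(int(cap), target))  # round down, never above ceiling
        cap *= 1 + weekly_growth
    return caps


print(ramp_schedule(10, 40, 0.5, 5))
```

Rounding down and capping at the ceiling keeps the schedule conservative, which fits the emphasis on consistent, gradual sending over aggressive volume.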

Ignoring these basics quietly lowers benchmarks while teams chase fixes in copy or offers instead of root causes.

As outbound scales, teams often miss cold email benchmarks because execution breaks.

Sending domains burn out faster than expected, lists decay over time, and sending patterns become inconsistent:

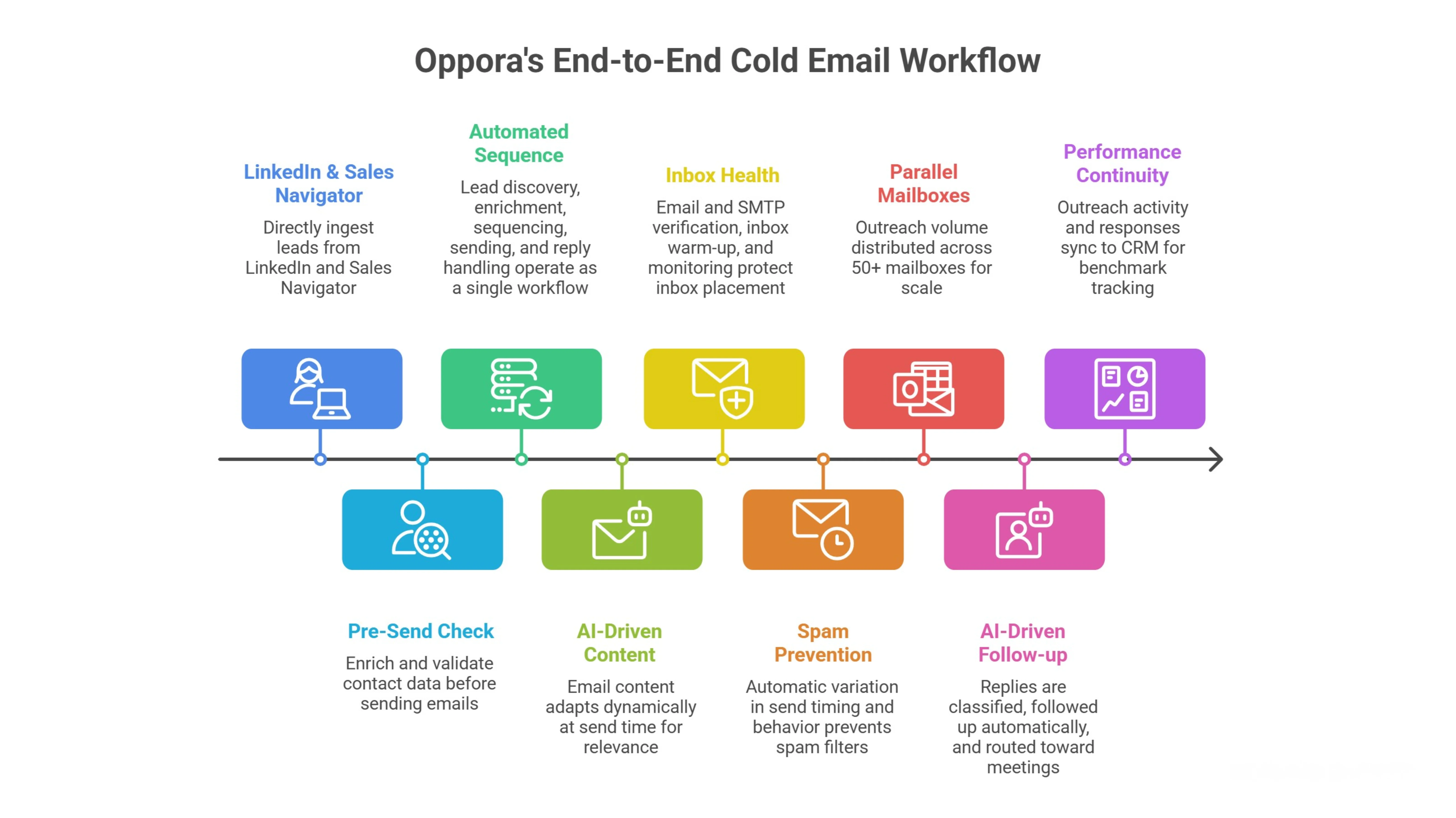

Oppora addresses this by keeping the entire outbound workflow connected.

It operates as an agentic outbound platform, similar to n8n but for sales.

Teams define objectives conversationally, and the system builds an end-to-end flow for sourcing, enrichment, sequencing, sending, and reply handling without stitching together multiple tools.

Below is how Oppora supports cold email benchmarks through a structured, end-to-end approach.

By keeping each step connected, outbound teams can maintain realistic cold email benchmarks even as scale and complexity increase.

Cold email benchmarks in 2026 reward relevance, intent, and consistency, not volume alone.

When teams improve the right metrics at the right stages, results become predictable.

Even average benchmarks drive pipeline growth when targeting, sending, and follow-ups stay consistent.

That consistency is hardest to maintain across sourcing, sequencing, and reply handling, which is why teams often rely on connected workflow platforms like Oppora to reduce execution gaps.

If you want to give it a try, you can sign up for the platform for free.

Wait at least 2–4 weeks of consistent sending. This allows deliverability, reply patterns, and audience behavior to stabilize before drawing conclusions.

Yes. Warm or problem-aware audiences usually respond more. Fully cold audiences may reply less initially, but still perform well with strong relevance.

Yes. Volume matters less than targeting, deliverability, and follow-up quality. Smaller campaigns often perform more consistently.

Review monthly, not daily. This avoids reacting to short-term noise and helps spot real performance trends.

Yes. Geography, industry maturity, and buying behavior all affect opens, replies, and conversions.
